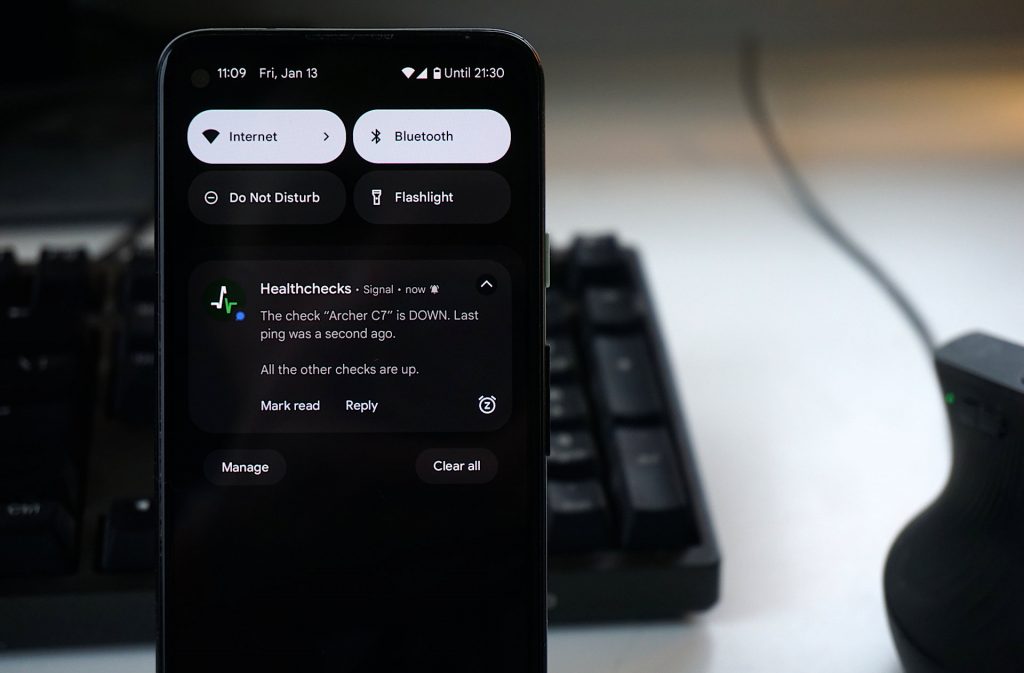

When a cron job does not run on time, Healthchecks can notify you using various methods. One of the supported methods is Signal messages. Signal is an end-to-end encrypted messenger app run by the non-profit Signal Foundation. Signal’s mobile client, desktop client, and server are free and open-source software (with some exceptions, read on!).

No Incoming Webhooks

Unlike most other instant messaging services, Signal does not provide incoming webhooks for posting messages in chats. If you want to send messages on the Signal network, you must run a full client and follow all the same cryptographic protocols that normal end-user clients follow. This is inconvenient for the integration developer but makes sense: the main features of Signal are strong cryptography and sharing as little information as possible with the Signal servers. The servers pass around messages and help with peer discovery, but (as far as I know) cannot send their own messages on the user’s behalf. Official incoming webhooks would conflict with the overall architecture of the system.

signal-cli

signal-cli is a third-party open-source Signal client. It uses the same Signal client library that the official clients use but offers a programmatic interface for sending and receiving messages. signal-cli supports command-line, DBUS, and JSON-RPC interfaces.

Signal’s official position on the signal-cli client seems to be that they do not support it, but they also have not explicitly banned it. When I asked Signal Support about their stance on signal-cli (and for advice on the rate-limit issues discussed below), I got just this short response back:

> Due to our limitations as a non-profit organization, we can only provide support for the product we provide. Signal-cli is not provided or maintained by us, therefore we cannot provide any support for it.

Using signal-cli in Healthchecks

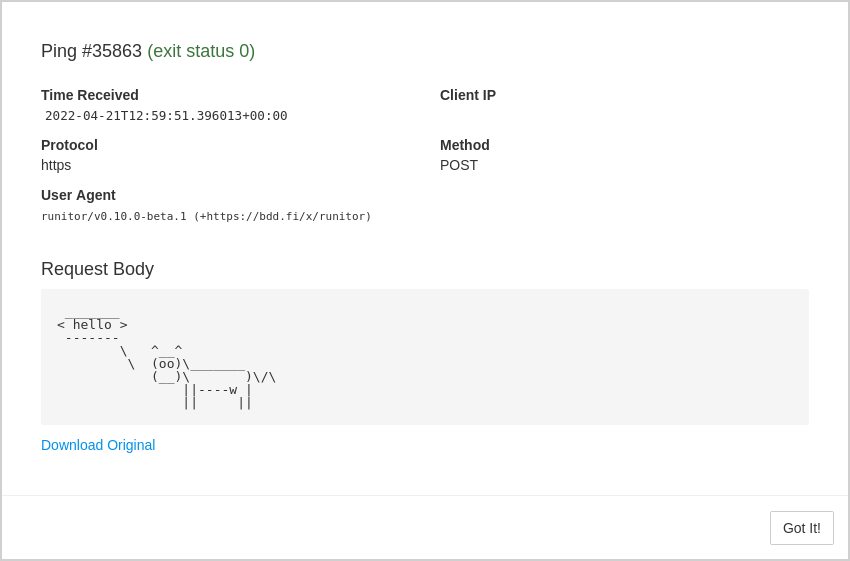

I coded the initial Signal integration in January 2021. To send messages, it ran a signal-cli send -m 'text goes here' command for every message. Each send took a minimum of one second, as every signal-cli invocation initialized the JVM and network connections just to do one small send operation. A more efficient approach was to run signal-cli in daemon mode and talk to it via DBUS or JSON-RPC.
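To make the per-message cost concrete, here is a minimal Python sketch of the one-process-per-message approach described above. It is not the actual Healthchecks code: the function names, the phone numbers, and the use of the `-a` account flag are assumptions for illustration.

```python
import subprocess
import time


def build_send_command(account, recipient, text):
    """Return the argv list for a one-off signal-cli send.

    Assumption: the sender account is passed with the -a flag.
    """
    return ["signal-cli", "-a", account, "send", "-m", text, recipient]


def send_once(account, recipient, text, runner=subprocess.run):
    """Spawn a fresh signal-cli process and return the elapsed seconds.

    Every call pays for JVM startup and new network connections, which
    is why this approach is slow when there are many messages to send.
    """
    started = time.monotonic()
    runner(build_send_command(account, recipient, text), check=True, timeout=60)
    return time.monotonic() - started
```

Calling `send_once("+10000000000", "+10000000001", "text goes here")` would start a new signal-cli process for that single message, assuming signal-cli is installed and the account is already registered.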

Also in January 2021, I upgraded the integration to talk to signal-cli over DBUS. It took some tinkering to figure out the DBUS interface configuration and to get Python code to talk to it. But it worked, and message delivery was now much quicker.

In December 2021, signal-cli added the JSON-RPC interface, and I switched the Healthchecks integration to it. Again, it took a fair bit of tinkering and support from the signal-cli author until I figured out how it all hangs together, how to read and write messages over a UNIX socket, and how to interpret them. There were two important improvements over the previous DBUS code:

- Simpler operations: I did not need the DBUS service with its associated configuration files anymore.

- The Healthchecks project did not need the “dbus-python” dependency anymore.
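As a rough illustration of what talking to the signal-cli daemon over a UNIX socket can look like, here is a minimal Python sketch that uses only the standard library. It assumes the daemon exchanges newline-delimited JSON-RPC 2.0 messages and accepts a `send` method with `recipient` and `message` parameters, as I understand the signal-cli JSON-RPC documentation. The function names and the socket path are hypothetical, and this is not the actual Healthchecks implementation.

```python
import json
import socket


def build_send_request(recipient, text, request_id):
    """Build a JSON-RPC 2.0 request asking the daemon to send one message."""
    return {
        "jsonrpc": "2.0",
        "method": "send",
        "params": {"recipient": [recipient], "message": text},
        "id": request_id,
    }


def call(sock, request):
    """Write one newline-terminated request and return the matching reply.

    The daemon may also push notifications (for example, incoming
    messages) on the same socket, so lines with a different or missing
    id are skipped.
    """
    sock.sendall(json.dumps(request).encode() + b"\n")
    reader = sock.makefile("rb")
    for line in reader:
        reply = json.loads(line)
        if reply.get("id") == request["id"]:
            return reply
    raise ConnectionError("socket closed before a reply arrived")


def send_via_daemon(socket_path, recipient, text):
    """Connect to the daemon's UNIX socket and send a single message."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        return call(sock, build_send_request(recipient, text, request_id="1"))
```

A call such as `send_via_daemon("/tmp/signal-cli.sock", "+10000000001", "text goes here")` returns the parsed reply, which holds either a `result` or an `error` member. If the daemon serves more than one account, the request would likely also need an `account` parameter.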

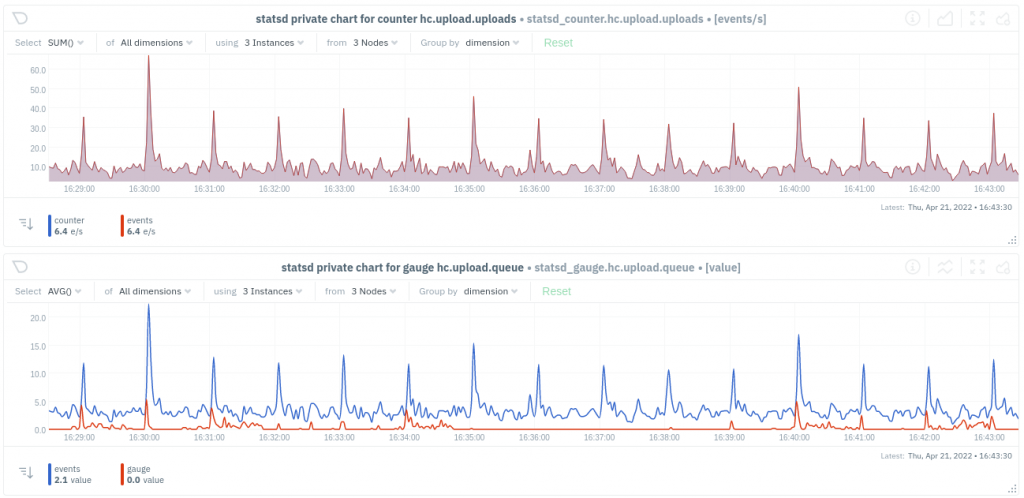

Rate limiting and CAPTCHAs

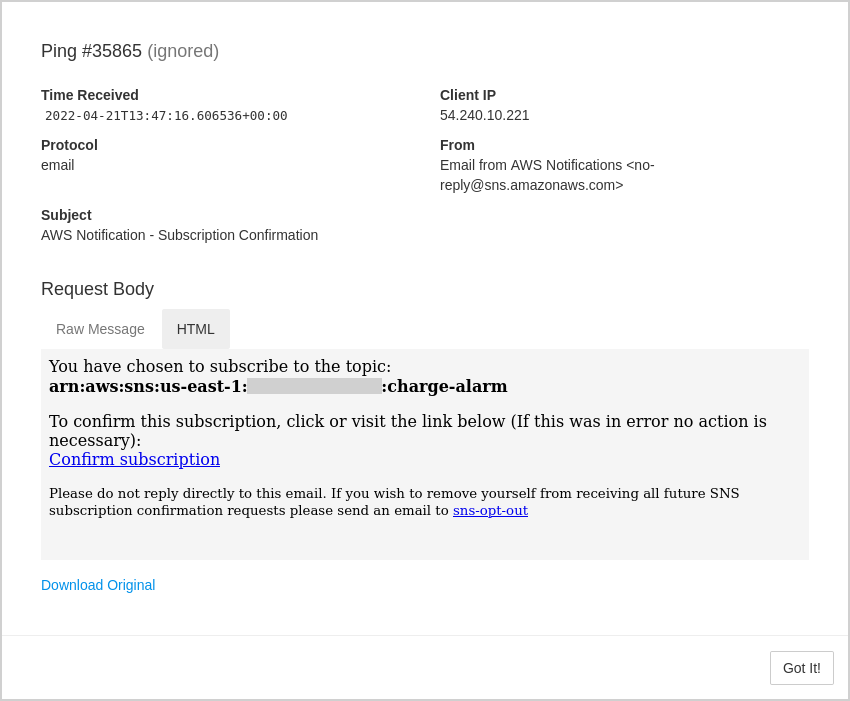

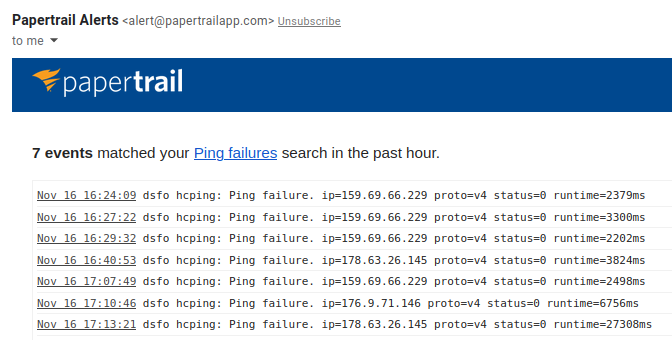

Around April 2022 I started to notice that some send operations were failing with an error message asking to solve a CAPTCHA challenge. These errors were infrequent at first and seemed to only affect the very first messages to new recipients. I added code to email me the CAPTCHA challenges, and I added a crude command-line utility to submit the CAPTCHA solutions. As the CAPTCHA challenges came in, I manually solved and submitted them. Signal was using Google reCAPTCHA, and I got plenty of opportunities to demonstrate my intelligence by expertly clicking on fire hydrants, crosswalks, and traffic lights. Sometimes at odd hours, sometimes roadside over a mobile hotspot.

As the frequency of CAPTCHAs gradually increased, I tried to make solving them less annoying:

- I figured out that being logged in to gmail.com makes solving the CAPTCHA a lot easier. Usually it takes just a single click, no fire hydrants.

- I set up my computer to automatically put the CAPTCHA solution in the clipboard.

- I made a web form for submitting CAPTCHA solutions. No need to fire up the terminal, just click a link in the email, and paste the solution.

Now solving a CAPTCHA challenge took just a few clicks, but the end-user experience was still not great. For some users, Signal notifications would not work until I showed up and solved yet another CAPTCHA. I did some spelunking in the Signal-Server code base. There is a class listing various rate limiters and their parameters. For any rate limiter, I could trace back where and how it was used. But I still could not pinpoint the piece of code that triggers the specific rate-limit errors I was seeing. Signal-Server has an “abusive-message-filter” module, which is private code; perhaps the logic lives there.

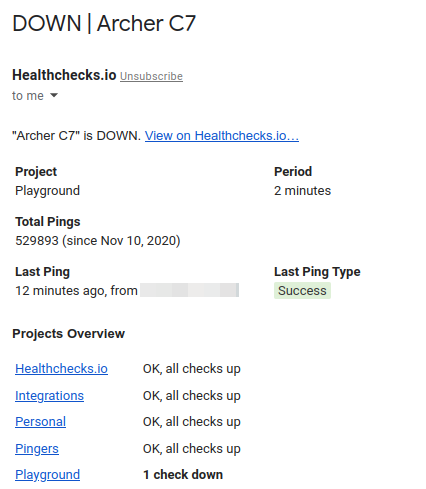

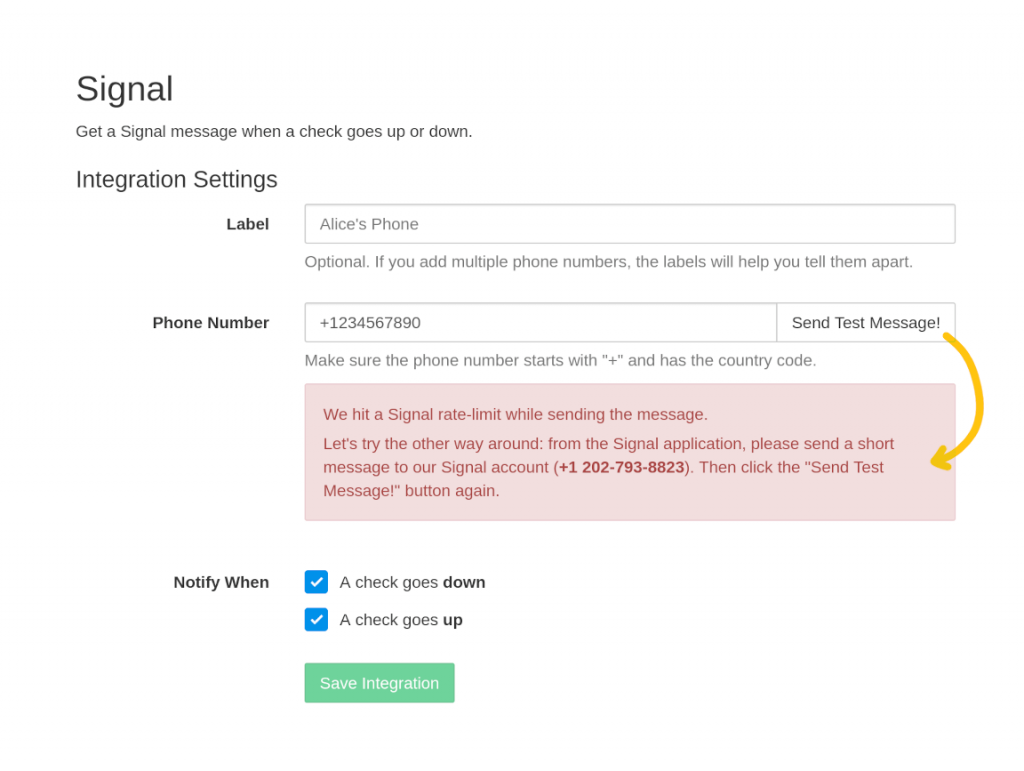

It seemed only the initial messages to new recipients were triggering rate-limit errors. After a single message got through, the following messages would work with no issues. So my next idea was to change the Signal integration onboarding flow:

- After the user has entered the phone number of the Signal recipient, ask them to send a test message.

- If the test message generates a rate-limit error, ask the user to initiate a conversation with us from their side, then try again.

My working theory is that users initiating the conversation with Healthchecks will look less abusive to Signal’s abusive message filter, and will help avoid hitting rate limits. But, if the theory fails and we still get rate-limit errors, at least the users will not create dysfunctional integrations (the “Save Integration” button becomes available only after successfully sending a test message).
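The gating logic of this onboarding flow can be sketched in a few lines of Python. Everything here is hypothetical and for illustration only: the `RateLimitError` exception, the `try_test_message` function, and the hint texts are made-up names, not the actual Healthchecks code.

```python
class RateLimitError(Exception):
    """Hypothetical error: the send was rejected with a rate-limit challenge."""


def try_test_message(send_test_message):
    """Attempt one test send and return (can_save, hint_for_user).

    send_test_message is any callable that sends the test message and
    raises RateLimitError when Signal rate-limits the send.
    """
    try:
        send_test_message()
    except RateLimitError:
        # Keep the save button disabled and ask the user to message us first.
        return False, "Please send us a message from your Signal app, then try again."
    return True, "Test message sent. You can now save the integration."
```

The first element of the returned tuple would decide whether the “Save Integration” button is enabled, so an integration that cannot deliver messages is never saved.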

In Summary

In summary, Healthchecks uses signal-cli to send Signal messages. It talks to signal-cli over JSON-RPC. To avoid rate limits, it asks the user to send the first message from their end. Building and maintaining the Signal integration has taken more effort than any other integration. But that is fine and, aside from the manual CAPTCHA solving, time well spent. I’m glad Healthchecks supports it, and I’m happy to see that the Signal integration is popular among Healthchecks.io users.

Happy monitoring and messaging,

Pēteris