I recently found out that Travis CI is ending its free offering for open source projects, so I looked at the alternatives. Having been badly burned by giving an external CI service access to my repositories, I am now wary of giving any service access to important accounts. Github Actions, being a part of Github, therefore looked attractive to me.

I had no experience with Github Actions going in, and I have now spent maybe four hours in total tinkering with it. So take this as “first impressions,” not “this is how you should do it.” I’m still a newbie, but it is fun to write about things you have just discovered and are starting to learn.

My objective is to run the Healthchecks Django test suite on every commit, ideally multiple times with different combinations of Python versions (3.6, 3.7, 3.8) and database backends (SQLite, PostgreSQL, MySQL). I found a starter template, added it to .github/workflows/django.yml, pushed the changes, and it almost worked!
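The matrix described above is the Cartesian product of the two lists, so it works out to nine jobs. As a quick illustration in plain Python (this is not how Github Actions itself expands matrices), itertools.product enumerates them:

```python
from itertools import product

# Axes of the desired test matrix, as described in the text.
PYTHON_VERSIONS = ["3.6", "3.7", "3.8"]
DATABASES = ["sqlite", "postgres", "mysql"]


def matrix_jobs(pythons, databases):
    """Return one dict per (Python version, database) combination."""
    return [
        {"python-version": py, "db": db}
        for py, db in product(pythons, databases)
    ]


if __name__ == "__main__":
    jobs = matrix_jobs(PYTHON_VERSIONS, DATABASES)
    for job in jobs:
        print(job)
    # 3 Python versions x 3 databases = 9 jobs
    print(len(jobs), "jobs in total")
```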

The initial workflow definition:

name: Django CI

on:

push:

branches: [ master ]

pull_request:

branches: [ master ]

jobs:

build:

runs-on: ubuntu-latest

strategy:

max-parallel: 4

matrix:

python-version: [3.6, 3.7, 3.8]

steps:

- uses: actions/checkout@v2

- name: Set up Python ${{ matrix.python-version }}

uses: actions/setup-python@v2

with:

python-version: ${{ matrix.python-version }}

- name: Install Dependencies

run: |

python -m pip install --upgrade pip

pip install -r requirements.txt

- name: Run Tests

run: |

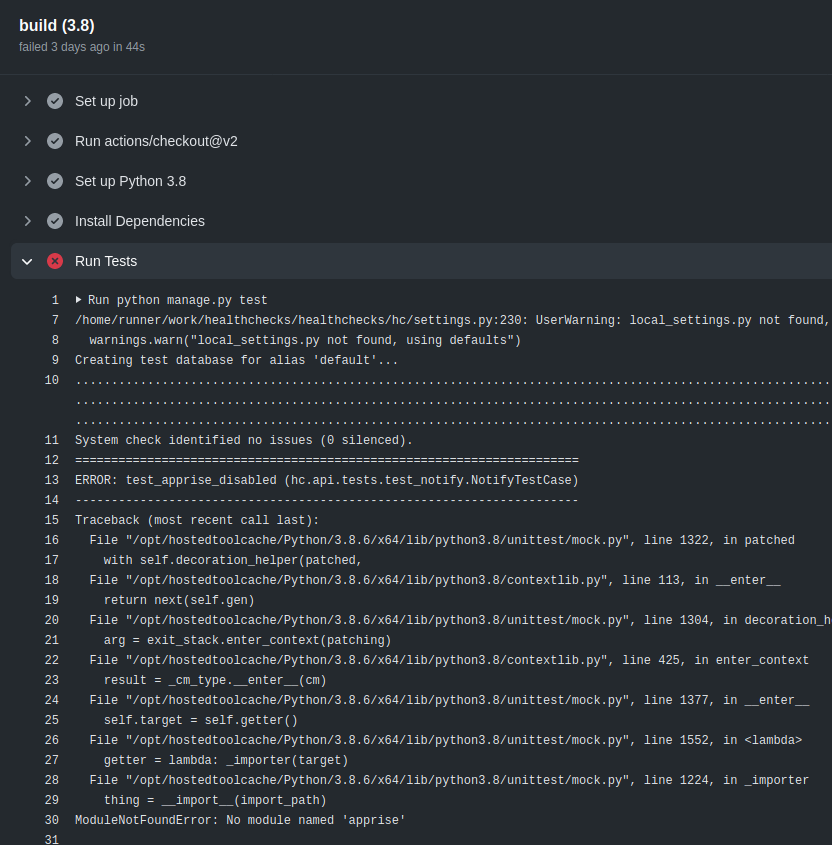

python manage.py testAnd the results:

Everything looked almost good, except for a few missing dependencies: several packages for optional features (apprise, braintree, mysqlclient) are not listed in requirements.txt but are needed to run the full test suite. After adding an extra “pip install” line to the workflow, the tests ran with no issues.
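One way to catch this kind of problem locally, before pushing, is to check which optional modules can be imported. The sketch below is a hypothetical helper, not part of Healthchecks; it assumes the import names apprise, braintree and MySQLdb (MySQLdb is the module that the mysqlclient package provides).

```python
import importlib.util

# Import names of the optional dependencies mentioned in the text.
# The mysqlclient package is imported as "MySQLdb".
OPTIONAL_MODULES = ["apprise", "braintree", "MySQLdb"]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(OPTIONAL_MODULES)
    if missing:
        print("Missing optional modules:", ", ".join(missing))
    else:
        print("All optional modules are installed")
```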

Adding Databases

If you run the Healthchecks test suite with its default configuration, it uses SQLite as the database backend, which usually Just Works. You can tell Healthchecks to use the PostgreSQL or MySQL backend instead by setting environment variables.
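To illustrate how such an environment-variable switch can work in a Django settings module, here is a simplified, hypothetical sketch. It is not Healthchecks' actual settings.py: the variable names DB, DB_HOST, DB_PORT, DB_USER and DB_PASSWORD match the ones used in the workflows in this post, while DB_NAME, the default values and the database_config helper are assumptions made for the example.

```python
import os

ENGINES = {
    "postgres": "django.db.backends.postgresql",
    "mysql": "django.db.backends.mysql",
}


def database_config(env=os.environ):
    """Build a Django DATABASES["default"] dict from environment variables.

    Hypothetical sketch: DB selects the backend (SQLite by default), and
    the remaining DB_* variables supply the connection details.
    """
    backend = env.get("DB", "sqlite")
    if backend == "sqlite":
        return {
            "ENGINE": "django.db.backends.sqlite3",
            "NAME": env.get("DB_NAME", "hc.sqlite"),
        }
    if backend not in ENGINES:
        raise ValueError(f"Unsupported DB backend: {backend!r}")

    return {
        "ENGINE": ENGINES[backend],
        # For PostgreSQL, an empty HOST makes Django connect over a
        # Unix domain socket instead of TCP.
        "HOST": env.get("DB_HOST", ""),
        "PORT": env.get("DB_PORT", ""),
        "NAME": env.get("DB_NAME", "hc"),
        "USER": env.get("DB_USER", ""),
        "PASSWORD": env.get("DB_PASSWORD", ""),
    }


# In settings.py this would be used as:
# DATABASES = {"default": database_config()}
```

Keeping the logic in a function that takes the environment as a parameter makes it easy to check each backend without touching the real process environment.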

The Github Actions documentation suggested I should use service containers. Using a special syntax, you tell Github Actions to start a database in a Docker container before the rest of the workflow executes. You then pass the database credentials (host, port, username, password) to Healthchecks as environment variables. It took me a few failed attempts to get it running, but I figured it out relatively quickly:

name: Django CI

on:

push:

branches: [ master ]

pull_request:

branches: [ master ]

jobs:

build:

runs-on: ubuntu-20.04

strategy:

max-parallel: 4

matrix:

db: [sqlite, postgres, mysql]

python-version: [3.6, 3.7, 3.8]

include:

- db: postgres

db_port: 5432

- db: mysql

db_port: 3306

services:

postgres:

image: postgres:10

env:

POSTGRES_USER: postgres

POSTGRES_PASSWORD: hunter2

options: >-

--health-cmd pg_isready

--health-interval 10s

--health-timeout 5s

--health-retries 5

ports:

- 5432:5432

mysql:

image: mysql:5.7

env:

MYSQL_ROOT_PASSWORD: hunter2

ports:

- 3306:3306

options: >-

--health-cmd="mysqladmin ping"

--health-interval=10s

--health-timeout=5s

--health-retries=3

steps:

- uses: actions/checkout@v2

- name: Set up Python ${{ matrix.python-version }}

uses: actions/setup-python@v2

with:

python-version: ${{ matrix.python-version }}

- name: Install Dependencies

run: |

python -m pip install --upgrade pip

pip install -r requirements.txt

pip install braintree mysqlclient apprise

- name: Run Tests

env:

DB: ${{ matrix.db }}

DB_HOST: 127.0.0.1

DB_PORT: ${{ matrix.db_port }}

DB_PASSWORD: hunter2

run: |

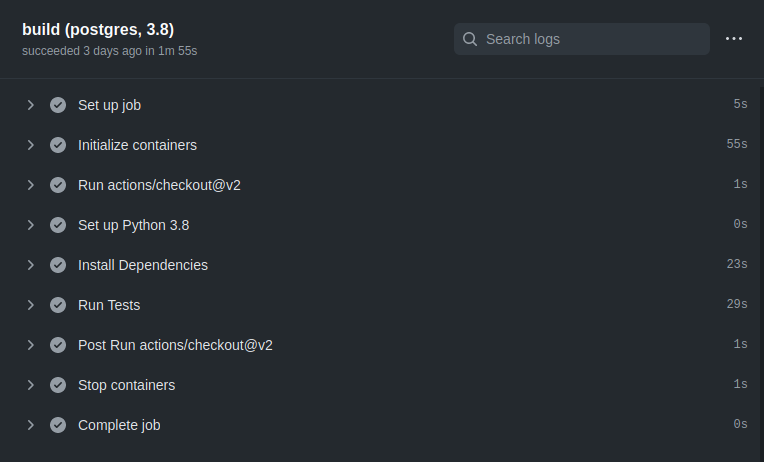

python manage.py testAnd here is the timing of a sample run:
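The include entries in the workflow above attach an extra db_port value to the combinations whose db matches. The function below is a simplified model of that expansion, based on my reading of the workflow syntax documentation rather than on Github's actual implementation: an include entry is merged into every combination whose original matrix values it does not contradict, and becomes a new combination of its own if it matches none.

```python
from itertools import product


def expand_matrix(axes, include=()):
    """Simplified model of Github Actions matrix expansion.

    axes: dict mapping axis name -> list of values.
    include: list of dicts. Each one is merged into every combination
    whose original axis values it does not contradict, or appended as
    a new combination if it matches none.
    """
    names = list(axes)
    combos = [dict(zip(names, values)) for values in product(*axes.values())]
    originals = [dict(c) for c in combos]  # snapshot of the original values

    for entry in include:
        matched = False
        for combo, original in zip(combos, originals):
            # An entry may add new keys but must not overwrite original values.
            if all(original.get(k, v) == v for k, v in entry.items()):
                combo.update(entry)
                matched = True
        if not matched:
            combos.append(dict(entry))
    return combos


if __name__ == "__main__":
    jobs = expand_matrix(
        {"db": ["sqlite", "postgres", "mysql"],
         "python-version": ["3.6", "3.7", "3.8"]},
        include=[{"db": "postgres", "db_port": 5432},
                 {"db": "mysql", "db_port": 3306}],
    )
    for job in jobs:
        print(job)
```

In this model the SQLite jobs get no db_port at all, which matches the workflow: for those jobs the DB_PORT variable simply ends up empty.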

Making It Quick

One thing that bugged me was that the database containers took around one minute to initialize. Additionally, both the PostgreSQL and MySQL containers would start in every job, even in the jobs that only needed SQLite. This is not a huge issue, but my inner hacker still wanted to see if the workflow could be made more efficient. With a little research, I found that the Github Actions runner images come with various preinstalled software. For example, the “ubuntu-20.04” image I was using has both MySQL 8.0.22 and PostgreSQL 13.1 preinstalled. If you are not picky about database versions, these could be good enough.

I also soon found the install scripts Github uses to install and configure the extra software. For example, one of them is the script used for installing PostgreSQL. One useful thing I learned from looking at it is that it does not set up any default passwords and does not make any changes to pg_hba.conf. Therefore I would need to take care of setting up authentication myself.

I dropped the services section and added new steps for starting the preinstalled databases. I used if conditionals to start each database only when needed:

name: Django CI

on:

push:

branches: [ master ]

pull_request:

branches: [ master ]

jobs:

test:

runs-on: ubuntu-20.04

strategy:

matrix:

db: [sqlite, postgres, mysql]

python-version: [3.6, 3.7, 3.8]

include:

- db: postgres

db_user: runner

db_password: ''

- db: mysql

db_user: root

db_password: root

steps:

- uses: actions/checkout@v2

- name: Set up Python ${{ matrix.python-version }}

uses: actions/setup-python@v2

with:

python-version: ${{ matrix.python-version }}

- name: Start MySQL

if: matrix.db == 'mysql'

run: sudo systemctl start mysql.service

- name: Start PostgreSQL

if: matrix.db == 'postgres'

run: |

sudo systemctl start postgresql.service

sudo -u postgres createuser -s runner

- name: Install Dependencies

run: |

python -m pip install --upgrade pip

pip install -r requirements.txt

pip install apprise braintree coverage coveralls mysqlclient

- name: Run Tests

env:

DB: ${{ matrix.db }}

DB_USER: ${{ matrix.db_user }}

DB_PASSWORD: ${{ matrix.db_password }}

run: |

coverage run --omit=*/tests/* --source=hc manage.py test

- name: Coveralls

if: matrix.db == 'postgres' && matrix.python-version == '3.8'

run: coveralls

env:

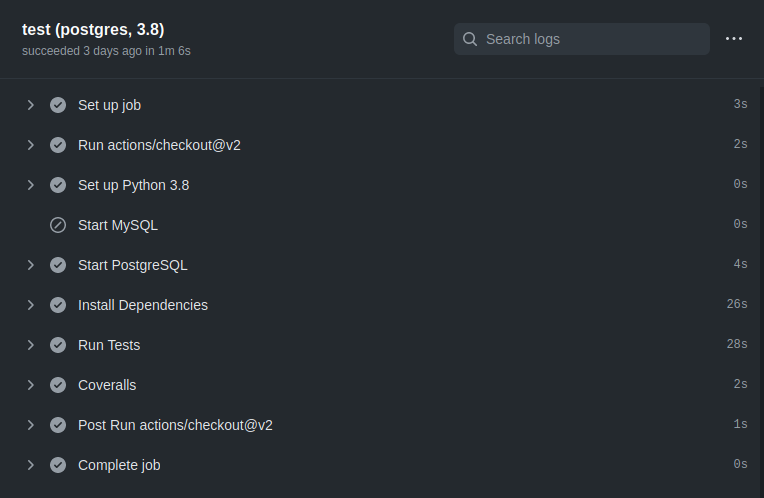

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}And the resulting timing:
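To double-check which optional steps each job runs, the if conditions can be mirrored in plain Python. This is only a rough model: the lambdas below stand in for Github's expression syntax and are evaluated against the nine combinations of the matrix. It shows that SQLite jobs start no database at all and that only one job uploads coverage to Coveralls.

```python
from itertools import product

DATABASES = ["sqlite", "postgres", "mysql"]
PYTHON_VERSIONS = ["3.6", "3.7", "3.8"]

# Python stand-ins for the `if:` expressions in the workflow.
# Steps without a condition always run and are left out here.
CONDITIONAL_STEPS = {
    "Start MySQL": lambda m: m["db"] == "mysql",
    "Start PostgreSQL": lambda m: m["db"] == "postgres",
    "Coveralls": lambda m: m["db"] == "postgres" and m["python-version"] == "3.8",
}


def steps_per_job():
    """Map each (db, python-version) job to the conditional steps it runs."""
    result = {}
    for db, py in product(DATABASES, PYTHON_VERSIONS):
        matrix = {"db": db, "python-version": py}
        result[(db, py)] = [
            name for name, cond in CONDITIONAL_STEPS.items() if cond(matrix)
        ]
    return result


if __name__ == "__main__":
    for job, steps in steps_per_job().items():
        print(job, steps or "(no database to start)")
```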

There is more time to be gained by optimizing the “Install Dependencies” step. Github Actions has a cache action which caches specific filesystem paths between job runs. One could figure out the precise location where pip installs packages and cache it. But this is where I decided it was “good enough” and merged the workflow configuration into the main Healthchecks repository.
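For anyone who wants to take that extra step, a common approach (an assumption on my part, not something I tried) is to cache pip's download cache directory under a key derived from a hash of requirements.txt, so the cache is invalidated whenever the dependencies change. In a workflow, the cache action combined with the hashFiles() expression function would do this. The Python sketch below only illustrates the idea; the key format and the helper names are hypothetical, and `pip cache dir` requires pip 20.1 or newer.

```python
import hashlib
import platform
import subprocess
import sys
from pathlib import Path


def cache_key_for(content, prefix="pip"):
    """Build a cache key that changes whenever `content` (bytes) changes.

    Hypothetical key format: "<prefix>-<OS name>-<Python X.Y>-<sha256>".
    """
    digest = hashlib.sha256(content).hexdigest()
    py = f"{sys.version_info.major}.{sys.version_info.minor}"
    return f"{prefix}-{platform.system()}-{py}-{digest}"


def requirements_cache_key(path="requirements.txt", prefix="pip"):
    """Cache key for the contents of a requirements file."""
    return cache_key_for(Path(path).read_bytes(), prefix)


def pip_cache_dir():
    """Ask pip where its cache directory lives (pip 20.1 or newer)."""
    out = subprocess.run(
        [sys.executable, "-m", "pip", "cache", "dir"],
        check=True, capture_output=True, text=True,
    )
    return out.stdout.strip()
```

Calling pip_cache_dir() gives the path to cache, and requirements_cache_key() gives a key that stays stable until requirements.txt is edited.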

In summary, first impressions: messing with Github Actions is good fun. The workflow syntax documentation is a good place to get a quick idea of what is possible. The workflow definition ends up being longer than the old Travis configuration, but I think the extra flexibility is worth it.